Dennis Wood

With most NAS units online 24/7, there is a very compelling argument for using them to host virtual machines.

For many outside the IT world, the term "virtual machine" may not mean a whole lot, but the concept is fairly simple. Most of us know that on small servers and the like, the CPU sits idle the majority of the time. Even so, in the past most people would build up multiple physical computers for different tasks. Most recent processors have a virtualization feature set (http://ark.intel.com/Products/VirtualizationTechnology) that allows one CPU to host multiple operating systems at once. This means you can run Linux, Windows, Ubuntu Server, etc. on the same computer, all fully functioning, simultaneously.
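If you have a Linux shell on a box (for example an SSH session), you can check whether its CPU reports this feature set by looking at the CPU flags. The sketch below is an illustration only, not a QNAP tool: it assumes a Linux host where the kernel lists Intel VT-x as `vmx` and AMD-V as `svm` on the `flags` lines of `/proc/cpuinfo`. The function name `virtualization_support` is hypothetical.

```python
from __future__ import annotations

from pathlib import Path

# Feature flags Linux reports on x86 when hardware virtualization is available.
VIRT_FLAGS = {"vmx": "Intel VT-x", "svm": "AMD-V"}


def virtualization_support(cpuinfo_text: str) -> set[str]:
    """Return the virtualization flags found in /proc/cpuinfo-style text."""
    found: set[str] = set()
    for line in cpuinfo_text.splitlines():
        # Each logical CPU has a "flags : ..." line listing its feature flags.
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            found.update(flag for flag in value.split() if flag in VIRT_FLAGS)
    return found


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")  # assumption: running on a Linux host
    if not cpuinfo.exists():
        print("/proc/cpuinfo not found; run this on a Linux host.")
    else:
        flags = virtualization_support(cpuinfo.read_text())
        if flags:
            names = ", ".join(VIRT_FLAGS[f] for f in sorted(flags))
            print("Hardware virtualization reported:", names)
        else:
            print("No vmx/svm flag found: unsupported, or disabled in firmware.")
```

An empty result is a hint rather than proof: firmware settings and kernel version can affect what gets reported, so check the processor's spec page if in doubt.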

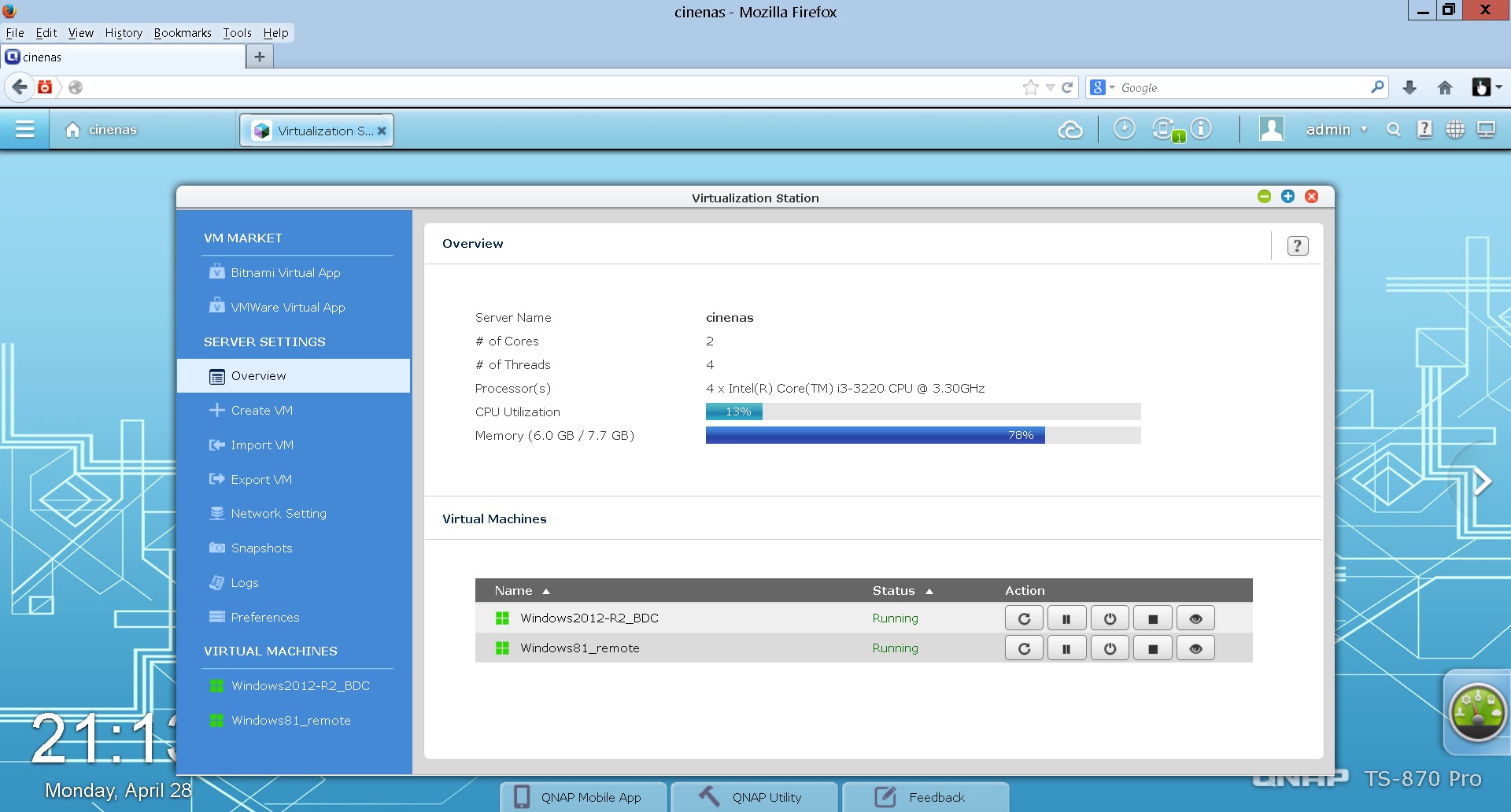

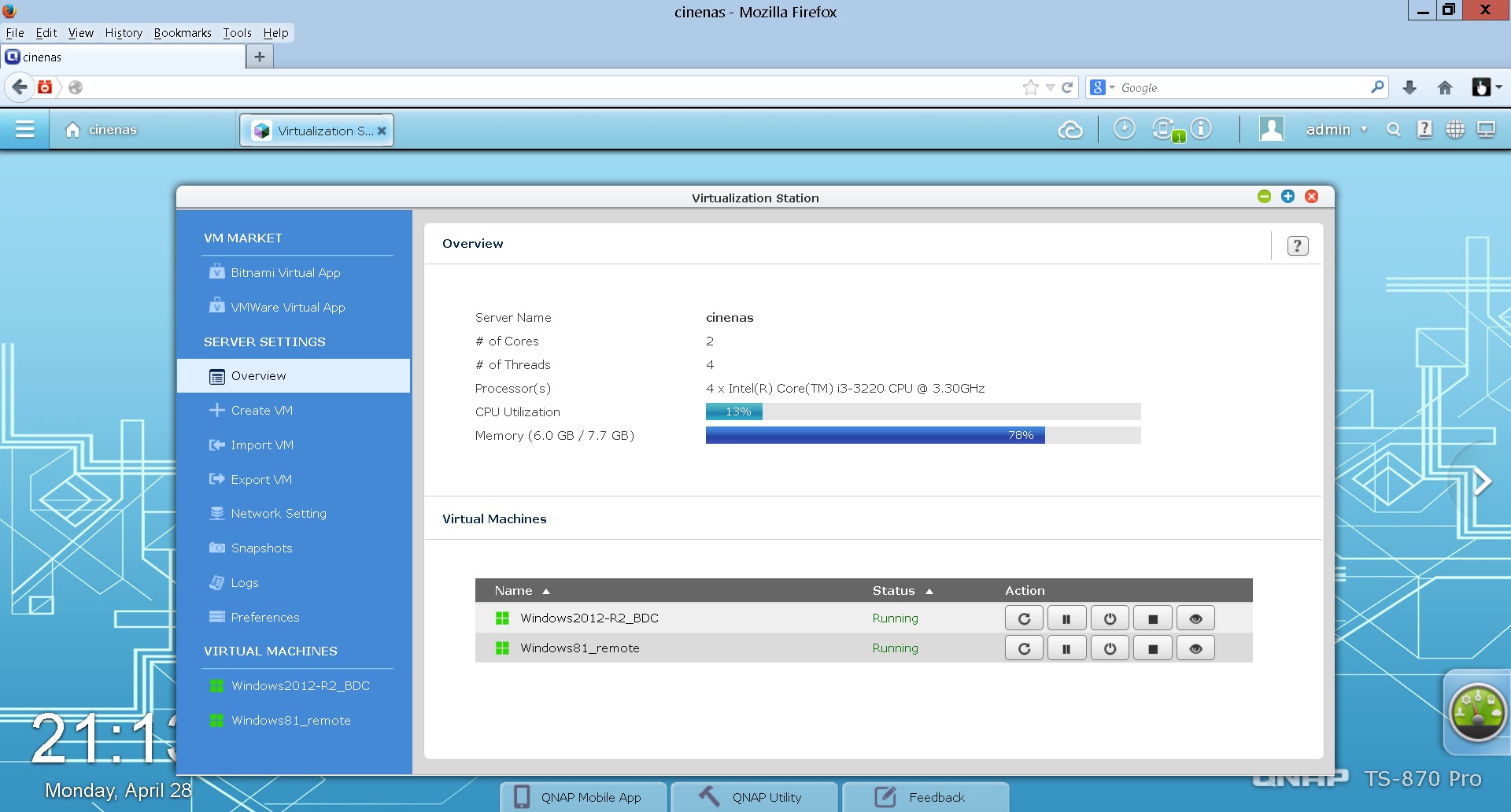

Some QNAP NAS units use processors that support virtualization, and QNAP has made me a happy guy by offering a very slick solution, the "Virtualization Station" (QTS 4.1), which makes it very simple to set up and maintain virtual machines. As the screenshots show, we're running Windows Server 2012 R2 (as a backup domain controller) as well as a Windows 8.1 workstation. This configuration removes the need for us to build up a separate server, and allows us to power down a remote access machine that used to run 24/7. Essentially, we're running a NAS, a Windows server, and a workstation with about the same power footprint as the NAS alone.

What do you need to set up your own?

1. The Virtualization Station will not let you add a VM until you add RAM to the NAS. On the QNAP TS-470 Pro and TS-870 Pro, the memory is DDR3-1333 SODIMMs, about $35 for 4GB. These NAS units (which use an i3 processor) ship with 2 x 1GB SODIMMs, so you'll need to remove and replace one or both SODIMMs to add more RAM. On these particular units the RAM looks impossible to get at without major disassembly, but it's actually easy: remove drives 1, 2 and 3 as well as the NAS case cover, then unplug the ATX power connector. You'll see that you can sneak the RAM in with one hand coming in from the top of the NAS and the other reaching into drive bay 1. I ended up removing the 2 x 1GB SODIMMs and replacing them with 2 x 4GB SODIMMs, for a total of 8GB of RAM.

2. You'll need an .iso image of your OS installation disc stored somewhere on the NAS; you point the wizard to it while creating the virtual machine. Creating the VM takes about 20 seconds, and the OS installation starts once you "power on" the virtual machine. Alternatively, you can create a VM image from an existing physical workstation and import it.

3. At least one of the NAS LAN ports will be dedicated to the virtual machine when you create it. If you create additional VMs, you can either share that one LAN connection among all of them or give them dedicated ports if you have enough to spare. We are using the 1GbE ports for the two VMs, and added 10GbE ports for the NAS file traffic.

4. You can access your VM either from the built-in console or through Windows Remote Desktop, etc., as appropriate. Either way, the virtual machine behaves exactly like a discrete computer, so once the OS is installed, you administer it just as you would a physical machine.
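Two quick sanity checks can go with steps 1 and 4: confirming that the kernel actually sees the new RAM, and confirming that a VM is answering on the Remote Desktop port. This is a rough sketch, not part of Virtualization Station. It assumes a Linux shell on the NAS with Python 3 available (it may need to be installed), that `/proc/meminfo` reports `MemTotal` in kB, and that RDP listens on its default TCP port 3389. The VM address `192.168.1.50` and the function names are hypothetical; the port check can run from any machine on the LAN.

```python
import os
import socket

# 3389/TCP is the default Remote Desktop (RDP) port; change it if your VM uses another.
RDP_PORT = 3389


def mem_total_gib(meminfo_text: str) -> float:
    """Parse the MemTotal line from /proc/meminfo-style text and return GiB."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            kib = int(line.split()[1])  # value is reported in kB (i.e. KiB)
            return kib / (1024 * 1024)
    raise ValueError("MemTotal line not found")


def port_open(host: str, port: int = RDP_PORT, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, or name lookup failed
        return False


if __name__ == "__main__":
    if os.path.exists("/proc/meminfo"):  # assumption: run on the NAS itself
        with open("/proc/meminfo") as f:
            print(f"RAM visible to the OS: {mem_total_gib(f.read()):.1f} GiB")
    vm_address = "192.168.1.50"  # hypothetical IP of the Windows VM
    print("RDP reachable:", port_open(vm_address))
```

Expect `MemTotal` to read a little under the installed 8GB, since the firmware and kernel reserve some memory for themselves.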

So there you go. Easy Peasy.

QNAP's Virtualization Station tutorial: http://www.qnap.com/useng/index.php?lang=en-us&sn=9595

Cheers,

Dennis.
