
Out of Memory Errors 388.1 / No space left on device [SOLUTIONS]

- Thread starter jtp10181


Thanks again for taking the time to help and share this info. You could probably add the VACUUM command to the script to shrink the file sizes when you run it, but that's outside of my use case.

You'd add something like `/usr/sbin/sqlite3 <database file> 'vacuum;'` after each of the DELETE commands in the script.

@jata - when I initially wrote the script, it was with the intent that it would be a one-off. When I found that the shell on the router didn't work with the array() object, I just copied/pasted instead of dealing with the research for arrays.

Since it looks like others may use this, I've cleaned the script up and added the vacuum command to it. Here is the latest iteration of the clean-dbs.sh script. It outputs to the console and vacuums the DBs during its run:

Code:

#!/bin/sh

DBDIR="/tmp/.diag"

dbs="wifi_detect.db stainfo.db eth_detect.db net_detect.db sys_detect.db"

echo ""

echo "********************************************************************************"

echo "***** Running Clean Dbs *****"

echo "********************************************************************************"

echo ""

### List all tmpfs mounts and their sizes

echo ""

echo " TMPFS Sizes (Before):"

df -h | grep -Ew 'Filesystem|tmpfs'

echo ""

echo " Free (Before):"

free

echo ""

echo "Cleaning DBs:"

echo ""

for db in ${dbs}

do

echo "${db} count before delete"

/usr/sbin/sqlite3 $DBDIR/$db 'select count() FROM DATA_INFO;'

# Keep the last 5 minutes (300 s) of rows, then VACUUM to shrink the file

/usr/sbin/sqlite3 "$DBDIR/$db" "DELETE FROM DATA_INFO WHERE strftime('%s','now') - data_time > 300; VACUUM;"

echo "${db} count after delete"

/usr/sbin/sqlite3 $DBDIR/$db 'select count() FROM DATA_INFO;'

done

echo ""

echo "DBs cleaned"

### List tmp/.diag files and their sizes

echo " File Sizes:"

ls -lh /tmp/.diag

### List all tmpfs mounts and their sizes

echo ""

echo " TMPFS Sizes (After):"

df -h | grep -Ew 'Filesystem|tmpfs'

echo ""

echo " Free (After):"

free

echo ""

echo "********************************************************************************"

echo "********************************************************************************"

echo ""

GHog

Regular Contributor

I discovered something over the last week that I think might solve the out of memory issues. My free memory was slowly decreasing on my mesh network over time, and I began to wonder what the cause was. I believe it's caused by bufferbloat. I went into QoS and made sure CAKE QoS was set up for VDSL2 and PPPoE. I then logged out, ran a bufferbloat test, and found it failing. So I went back into the router, and this time I unchecked CAKE's automatic bandwidth setting. I followed the 95%-of-max-bandwidth rule and started tuning the download and upload values. Over a couple of days I got everything dialed in and finally got an A+ rating for bufferbloat. Over the next couple of days, I noticed my memory usage actually went down, and now it is stable at about 55-60%. When I turn QoS off, it starts to creep up like it did before. I would try this on DSL and fiber to see if it solves the out of memory errors on your router. This was an eye opener.
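The "95% of max bandwidth" starting point is plain arithmetic on your measured line rates. A minimal sketch, assuming rates in kbit/s; `cake_rate` is a hypothetical helper, not a router command, and the resulting numbers would be typed into the CAKE download/upload fields by hand:

```shell
#!/bin/sh
# Hypothetical helper: starting CAKE bandwidth limit as a percentage
# (default 95) of a measured line rate. Integer math only, because
# BusyBox's shell has no floating point.
cake_rate() {
    rate_kbps="$1"      # measured max rate in kbit/s
    pct="${2:-95}"      # percentage to keep; 95 is the rule of thumb
    echo $(( rate_kbps * pct / 100 ))
}

# Example: a line measured at 80000 kbit/s down and 20000 kbit/s up.
echo "download limit: $(cake_rate 80000) kbit/s"
echo "upload limit:   $(cake_rate 20000) kbit/s"
```

From there, the values get nudged between bufferbloat tests until the grade stops improving, which is the dialing-in step described above.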

Mrs_Esterhouse

Occasional Visitor

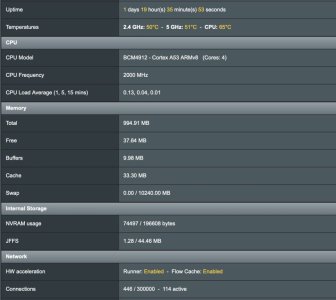

Where are these charts located in Merlin, or is this a plugin?

Odd thing is, I had this same setup on an AC56U and it worked fine, and also on this AX68U on prior firmware; it never used the swap file before.

I enabled the swap file again just so it can keep working if it needs it, and removed the two scripts you suggested.

Memory looks about the same after removing those two and rebooting, but maybe not running the speed test will help. I did have the speed test running only once a day; I think the default was much more often. I will keep an eye on it and see if it starts swapping again.


Also, NVRAM and JFFS have always had plenty of free space, so I assume all the errors are related to RAM even though it is complaining about writing files.
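That assumption matches the df output posted later in this thread: /tmp, which includes /tmp/.diag where the DB files live, is a tmpfs mount, and tmpfs is backed by RAM rather than flash. So a write failing there and the router running low on memory are two views of the same problem, independent of NVRAM and JFFS free space. A minimal sketch for confirming a mount point's filesystem type by reading /proc/mounts; `fs_type` is a hypothetical helper name:

```shell
#!/bin/sh
# Hypothetical helper: print the filesystem type of a mount point by
# looking it up in a mounts table (default: /proc/mounts).
# Each line of /proc/mounts is: device mountpoint fstype options dump pass
fs_type() {
    mnt="$1"
    table="${2:-/proc/mounts}"
    # If a path is mounted more than once, the last entry is the active one.
    awk -v m="$mnt" '$2 == m { t = $3 } END { if (t != "") print t }' "$table"
}

# On the router, this should print "tmpfs".
fs_type /tmp
```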


jtp10181

Senior Member

It's an add-on script, "scMerlin"; it seems to be pretty lightweight and has some handy stuff in it. I just went through and removed all the add-on scripts I don't need, and this was one I kept.

jtp10181

Senior Member

So I have been wanting to figure out something to monitor the RAM/CPU for a while, and this was more motivation. I had tried Netdata before, but it was just too intense for the router and complicated to set up. It does have the advantage of being standalone (you don't need any other systems to watch it). I recently found another solution: node_exporter in conjunction with a Prometheus database and a Grafana dashboard hosted on a local mini-PC or SBC. It still takes up about 14 MB of resident memory according to htop, but otherwise seems to be pretty lightweight.

I roughly followed this guide: https://www.jeffgeerling.com/blog/2022/monitoring-my-asus-rt-ax86u-router-prometheus-and-grafana

There is a pre-made dashboard which worked pretty well out of the box and I just moved a few things around for my own preferences.

I found that the newest node_exporter, 1.5.0, works with one small error; the comments on the article have a solution: add `--no-collector.netdev.netlink` to the arguments. I also changed the port to 9102 so as not to conflict with the ASUS print server (which I am not using, but I might as well avoid it). He did not explain why he made a watchdog-type cron job, but I found that without it node_exporter eventually dies for some reason; the cron job will start it again if it dies.
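The article's cron job isn't reproduced here, but the watchdog idea is simple: every few minutes, check whether node_exporter is running and start it if it isn't. A minimal sketch, assuming a hypothetical install path (`NE_BIN`) and the two arguments mentioned above; the script name `ne_watchdog.sh` is also hypothetical, so adjust everything to your own setup:

```shell
#!/bin/sh
# Watchdog sketch for node_exporter on the router.
# ASSUMPTION: NE_BIN is a hypothetical install path; point it at your binary.
NE_BIN="${NE_BIN:-/jffs/addons/node_exporter/node_exporter}"
# 9102 instead of node_exporter's default 9100, which the print server uses
NE_PORT="${NE_PORT:-9102}"

# Arguments passed to node_exporter (a function so they can be checked).
ne_args() {
    echo "--web.listen-address=:${NE_PORT} --no-collector.netdev.netlink"
}

# Start node_exporter only if no process with that name is running.
ne_watchdog() {
    if pidof node_exporter >/dev/null 2>&1; then
        return 0                      # already running, nothing to do
    fi
    if [ ! -x "$NE_BIN" ]; then
        echo "node_exporter not found at $NE_BIN" >&2
        return 1
    fi
    # Word splitting of $(ne_args) is intentional: two separate arguments.
    "$NE_BIN" $(ne_args) >/dev/null 2>&1 &
}

# Only act when called as "ne_watchdog.sh run", e.g. from cron.
case "${1:-}" in
    run) ne_watchdog ;;
esac
```

On Asuswrt-Merlin, such a job is usually registered with the firmware's `cru` helper, for example `cru a ne_watchdog "*/5 * * * * /jffs/scripts/ne_watchdog.sh run"`.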

In this example, the break in the middle is a reboot; I wanted to see what it would look like with a fresh start.

You can see the used RAM dropped, and it also looks like /tmp dropped after the reboot, which is expected.

Something with the load averages doesn't seem to work quite right, but I have not figured that out yet.


djwazzup06

New Around Here

Finally found a forum that relates to my problem, so I created an account just to post here! Awesome work, collectively, by the way. You all were spot on.


Here's some quick detail about my router.

Code:

ZenWiFi_XD6 armv7l

BusyBox v1.24.1

Firmware Version: 3.0.0.4.388_21380

After finding this post a few weeks ago, I've been monitoring my resource consumption. My culprit is the ever-growing DB files in tmpfs. Once I was positive this was the problem, I ran the clean-dbs.sh script posted by @lamentary. This was my output:

Code:

********************************************************************************

***** Running Clean Dbs *****

********************************************************************************

TMPFS Sizes (Before):

Filesystem Size Used Available Use% Mounted on

tmpfs 250.0M 284.0K 249.7M 0% /var

tmpfs 250.0M 64.3M 185.6M 26% /tmp/mnt

tmpfs 250.0M 64.3M 185.6M 26% /tmp/mnt

tmpfs 250.0M 64.3M 185.6M 26% /tmp

Free (Before):

total used free shared buffers cached

Mem: 511928 435500 76428 66372 18820 112564

-/+ buffers/cache: 304116 207812

Swap: 0 0 0

Cleaning DBs:

wifi_detect.db count before delete

65536

wifi_detect.db count after delete

20

stainfo.db count before delete

325056

Error: database or disk is full

stainfo.db count after delete

325056

eth_detect.db count before delete

65536

eth_detect.db count after delete

20

net_detect.db count before delete

32768

net_detect.db count after delete

10

sys_detect.db count before delete

32768

sys_detect.db count after delete

10

DBs cleaned

File Sizes:

-rw-rw-rw- 1 root root 20.0K Feb 5 03:51 channel_change.db

-rw-rw-rw- 1 root root 20.0K Feb 16 13:47 eth_detect.db

-rw-rw-rw- 1 root root 20.0K Feb 16 13:47 net_detect.db

-rw-rw-rw- 1 root root 20.0K Feb 16 04:59 port_status_change.db

-rw-rw-rw- 1 root root 40.5M Feb 16 13:47 stainfo.db

-rw-rw-rw- 1 root root 20.0K Feb 15 01:09 stainfo_stable.db

-rw-rw-rw- 1 root root 20.0K Feb 16 13:47 sys_detect.db

-rw-rw-rw- 1 root root 20.0K Feb 5 03:51 sys_setting.db

-rw-rw-rw- 1 root root 20.0K Feb 16 13:47 wifi_detect.db

-rw-rw-rw- 1 root root 64.0K Feb 16 07:56 wifi_dfs.db

-rw-rw-rw- 1 root root 20.0K Feb 5 03:51 wifi_setting.db

TMPFS Sizes (After):

Filesystem Size Used Available Use% Mounted on

tmpfs 250.0M 284.0K 249.7M 0% /var

tmpfs 250.0M 43.3M 206.7M 17% /tmp/mnt

tmpfs 250.0M 43.3M 206.7M 17% /tmp/mnt

tmpfs 250.0M 43.3M 206.7M 17% /tmp

Free (After):

total used free shared buffers cached

Mem: 511928 415552 96376 44772 19200 91812

-/+ buffers/cache: 304540 207388

Swap: 0 0 0

Note the "Error: database or disk is full"; the main contributor, stainfo.db, is still at large. I don't know a thing about SQL/MySQL/SQLite.

So am I "too far gone", and do I just need to reboot the router and then properly maintain these DB files with the cron job?

jtp10181

Senior Member

Did you try running it multiple times? I'm thinking there might not be enough disk space for it to shuffle things around and clean up.
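If space is the culprit, it helps to know how SQLite uses it. Per the SQLite documentation, VACUUM copies the database into a temporary file and then overwrites the original, so it can need as much as twice the file's size in free space; a 40.5 MB stainfo.db could need around 81 MB. Since the script runs DELETE and VACUUM in a single sqlite3 call, the error could have come from either step. A minimal pre-check sketch, treating 2x as a conservative upper bound; `vacuum_fits` and `can_vacuum` are hypothetical names:

```shell
#!/bin/sh
# Sketch: before VACUUMing a SQLite file, check that its filesystem has at
# least twice the file's size free (SQLite's documented worst case).

# Pure check: succeeds if free_kb >= 2 * db_kb.
vacuum_fits() {
    db_kb="$1"
    free_kb="$2"
    [ "$free_kb" -ge $(( db_kb * 2 )) ]
}

# Gather real numbers for a file: size via wc -c, free space via df.
# ASSUMPTION: df -Pk prints "Filesystem 1024-blocks Used Available ..."
# with Available in column 4, as GNU and BusyBox df do.
can_vacuum() {
    db="$1"
    [ -f "$db" ] || return 1
    bytes=$(wc -c < "$db" | tr -d ' ')
    db_kb=$(( (bytes + 1023) / 1024 ))
    free_kb=$(df -Pk "$(dirname "$db")" | awk 'NR == 2 { print $4 }')
    [ -n "$free_kb" ] || return 1
    vacuum_fits "$db_kb" "$free_kb"
}
```

In the cleanup loop, the VACUUM could then run only when `can_vacuum "$DBDIR/$db"` succeeds. Keep in mind that on a RAM-backed tmpfs, df's "Available" figure is a size cap and may overstate how much memory is really free.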

I think you could also just delete that file if you do not want to reboot; it will get created again.

Using a cron job should help keep them cleaned up so you don't get to that point anymore.
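For the cron job, Asuswrt-Merlin provides the `cru` helper (`cru a <id> "<schedule> <command>"` adds a job, `cru l` lists them). Cron entries live in RAM and are lost on reboot, so they are usually re-added from /jffs/scripts/services-start. A minimal sketch that adds the job only if it isn't already listed, assuming the script was saved as /jffs/scripts/clean-dbs.sh, an every-5-minutes schedule (matching the script's 300-second retention), and that `cru l` tags each entry with `#<id>#` (check the output of `cru l` on your router). `ensure_cron_job`, the ID `CleanDBs`, and the file name `add-cron.sh` are hypothetical:

```shell
#!/bin/sh
# Sketch: schedule clean-dbs.sh with Asuswrt-Merlin's cru helper.
# ASSUMPTIONS: script path, schedule, and that `cru l` marks entries "#ID#".
JOB_ID="CleanDBs"
JOB_SPEC="*/5 * * * * /jffs/scripts/clean-dbs.sh >/dev/null 2>&1"

# Add the job once; calling this repeatedly does not create duplicates.
ensure_cron_job() {
    if cru l 2>/dev/null | grep -q "#${JOB_ID}#"; then
        return 0
    fi
    cru a "$JOB_ID" "$JOB_SPEC"
}

# e.g. from /jffs/scripts/services-start:  /jffs/scripts/add-cron.sh install
case "${1:-}" in
    install) ensure_cron_job ;;
esac
```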


djwazzup06

New Around Here

Oh, yep! Running it again was able to clean stainfo.db. I've set up the cron job to run every minute and will continue to monitor.

Thank you!


I was also seeing this problem on my new AX86U_Pro under 388.1. I've resorted to scheduling reboots every other day, removed Diversion and YazFi from the router, and switched to AdGuard Home running externally on a Raspberry Pi, which seems to have helped greatly. I did run the DB script a few times, and it seemed to help at first when things were bad, but since I started the regular reboots and external ad blocking, it hasn't been needed.

One thing of note with the script: does anyone know whether the 86U_Pro would be different from other models with regard to the stainfo database?

When I run the script I get the following output for stainfo:

Code:

stainfo.db count before delete

Error: no such table: DATA_INFO

Error: no such table: DATA_INFO

stainfo.db count after delete

Error: no such table: DATA_INFO

Looks like you're in the same boat as @mmacedo in that you don't have the stainfo.db file, @JimbobJay.

To be clear, the script will show those errors and then just keep running, so it shouldn't cause any real issues, but I've gone ahead and added a conditional check to verify the file exists.

@jtp10181, thanks for linking things in the first post; would you mind using this as the updated script when you find time?

Code:

#!/bin/sh

DBDIR="/tmp/.diag"

dbs="wifi_detect.db stainfo.db eth_detect.db net_detect.db sys_detect.db"

echo ""

echo "********************************************************************************"

echo "***** Running Clean Dbs *****"

echo "********************************************************************************"

echo ""

### List all tmpfs mounts and their sizes

echo ""

echo " TMPFS Sizes (Before):"

df -h | grep -Ew 'Filesystem|tmpfs'

echo ""

echo " Free (Before):"

free

echo ""

echo "Cleaning DBs:"

echo ""

for db in ${dbs}

do

if [ -f "$DBDIR/$db" ]; then

echo "${db} count before delete"

/usr/sbin/sqlite3 $DBDIR/$db 'select count() FROM DATA_INFO;'

# Keep the last 5 minutes (300 s) of rows, then VACUUM to shrink the file

/usr/sbin/sqlite3 "$DBDIR/$db" "DELETE FROM DATA_INFO WHERE strftime('%s','now') - data_time > 300; VACUUM;"

echo "${db} count after delete"

/usr/sbin/sqlite3 $DBDIR/$db 'select count() FROM DATA_INFO;'

fi

done

echo ""

echo "DBs cleaned"

### List tmp/.diag files and their sizes

echo ""

echo " File Sizes:"

ls -lh /tmp/.diag

### List all tmpfs mounts and their sizes

echo ""

echo " TMPFS Sizes (After):"

df -h | grep -Ew 'Filesystem|tmpfs'

echo ""

echo " Free (After):"

free

echo ""

echo "Process resources:"

ps -w | grep -E 'networkmap|PID|conn_diag|sysstate' | grep -v grep

echo ""

echo "********************************************************************************"

echo "********************************************************************************"

echo ""
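JimbobJay's errors come from querying a DATA_INFO table that isn't there. A slightly stricter variant of the script's file-existence check also confirms the table exists before querying, which would additionally skip a file that exists but lacks that table. A minimal sketch, assuming the sqlite3 path the script already uses; `has_data_info` is a hypothetical name:

```shell
#!/bin/sh
# Sketch: only touch a DB if the file exists AND has a DATA_INFO table.
SQLITE="${SQLITE:-/usr/sbin/sqlite3}"   # path used by clean-dbs.sh

has_data_info() {
    db="$1"
    [ -f "$db" ] || return 1
    # sqlite_master lists every table in the file; count matching names.
    n=$("$SQLITE" "$db" "SELECT count(*) FROM sqlite_master WHERE type='table' AND name='DATA_INFO';" 2>/dev/null)
    [ "${n:-0}" -gt 0 ] 2>/dev/null
}
```

In the loop, it would replace the file test: `if has_data_info "$DBDIR/$db"; then`.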


Looks like you are in the same boat as @mmacedo, in that for whatever reason, you don't have stainfo.db, @JimbobJay. If you remove it from the dbs variable (~line 4), you won't get those errors.

To be clear, those errors shouldn't cause any problems per se; they just indicate that you don't have the SQLite file for stainfo.db.

If you want to remove it, the dbs variable should look like this:

Code:

dbs="wifi_detect.db eth_detect.db net_detect.db sys_detect.db"

Yeah, it seems that the set of *.db files is not always going to be the same on all routers, and apparently not all *.db files will exist on the same router all the time. I don't know what determines which specific *.db file gets created, or when and under what circumstances.

For example, the following screenshot shows the current DB files on an RT-AX86S router:

Notice that it doesn't have the "stainfo.db" and "stainfo_stable.db" files that other users have shown, but it does have a "port_status_usb_change.db" file that others don't have.

BTW, since my cousin's RT-AX86S router was having the same problem with some *.db files getting too big, I took your original script (thank you for that) and made several changes: it finds only the *.db files that actually exist in the "/tmp/.diag" folder, then checks for files larger than 64KB; when it finds one, it trims data older than 30 minutes from that DB file. This configuration is working well for my cousin's use case (he's running the script every hour as a cron job; no need to run it more often). The modified script also reports the file size just before and right after the trimming process; in addition, it creates a log file of all the results in the "/tmp/var/tmp" folder. If you run the script manually from its location (e.g. cd /jffs/scripts), it will also output the results to the terminal window.

Attached below is a sample log file (CleanDBFiles.log.txt) from an early run of the script.

If you're interested in taking a look at the modified version of the script, it's available in PasteBin:

Bash:

mkdir -m 755 -p /jffs/scripts

curl -kLSs --retry 3 --retry-delay 5 --retry-connrefused pastebin.com/raw/yfqjSMzp | tr -d '\r' > /jffs/scripts/CleanDBFiles.sh

chmod 755 /jffs/scripts/*.sh

My 2 cents.
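The Pastebin script itself isn't reproduced here. As a rough illustration of the approach described (discover only the *.db files that exist, act only on those above a size threshold, trim rows older than a cutoff), here is a minimal sketch. The 64KB threshold and 30-minute cutoff come from the description; the function names are hypothetical, and it assumes each file has a DATA_INFO table whose data_time column holds Unix timestamps, as the earlier clean-dbs.sh DELETE implies:

```shell
#!/bin/sh
# Sketch of size-triggered trimming; NOT Martinski's Pastebin script.
DBDIR="${DBDIR:-/tmp/.diag}"
SQLITE="${SQLITE:-/usr/sbin/sqlite3}"
MIN_KB=64          # only trim files larger than this
MAX_AGE_SEC=1800   # keep the most recent 30 minutes of rows

# File size in bytes (tr strips any padding some wc builds add).
fsize() { wc -c < "$1" | tr -d ' '; }

# Print each existing *.db file in a directory that is larger than min_kb.
list_large_dbs() {
    dir="$1"
    min_kb="$2"
    for f in "$dir"/*.db; do
        [ -f "$f" ] || continue              # no match: glob stays literal
        if [ "$(fsize "$f")" -gt $(( min_kb * 1024 )) ]; then
            echo "$f"
        fi
    done
}

# Delete old rows, then reclaim the space.
trim_db() {
    "$SQLITE" "$1" "DELETE FROM DATA_INFO WHERE strftime('%s','now') - data_time > ${MAX_AGE_SEC}; VACUUM;"
}

# Trim every large DB, reporting the size before and after.
clean_all() {
    for db in $(list_large_dbs "$DBDIR" "$MIN_KB"); do
        echo "$(date '+%F %T') $db: $(fsize "$db") bytes before"
        trim_db "$db"
        echo "$(date '+%F %T') $db: $(fsize "$db") bytes after"
    done
}

case "${1:-}" in
    run) clean_all ;;
esac
```

The file list relies on word splitting, which is fine for the names seen in /tmp/.diag but would break on paths containing spaces.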


Great work, @Martinski!

For the customizable parameters, see the "CUSTOMIZABLE PARAMETERS SECTION" near the top of the script.

alan6854321

Senior Member

Hi there,

I've been keeping an eye on this thread, as I was getting sporadic reboots on my AX86S running 388.1; I went back to 386_7.2 in the end.

I've recently loaded the latest beta, 388.2_beta1. The router runs with about 32 MB of free memory; it has a 2 GB swap file, but I've never seen it used.

It's been running for a couple of days now without problems, but I've just noticed that the files in /tmp/.diag seem to have some kind of garbage collection going on.

I don't know if it's size-based or a regular job but stainfo.db, for example, seems to grow to around 1600000 before getting chopped down to around 800000.

Maybe ASUS stole your idea?


I have the same (or a similar) issue. Do you run AGH?

alan6854321

Senior Member

No, I have very few add-ons: YazDHCP, scMerlin, mosquitto, openssh-sftp-server.

I had exactly the same thing over here. I went back to 386_5.2 and tried the newer firmware, but this one was also not stable with my router, so I went back again...
